PyTorch Implementation of a Convolutional Neural Network for Sentence Classification

This is a PyTorch implementation of the model described in Convolutional Neural Networks for Sentence Classification (Kim, 2014).

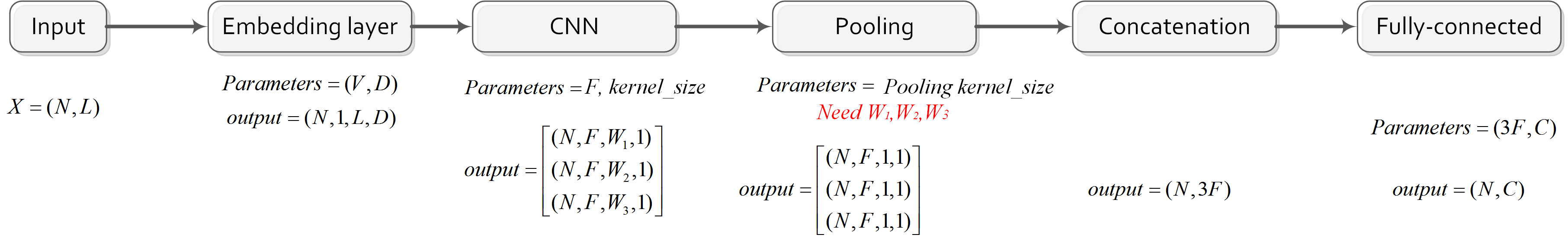

Dimension analysis for network parameters. Dimension analysis means tracking the shape of every tensor through the network. It is useful in deep learning, especially when a layer's parameters must be set from the output shape of the previous layer. In this model, the pooling kernel sizes depend on the output of the CNN layer, so correctly calculating `W_1`, `W_2`, and `W_3` is the key step in the example that follows.
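The size calculation can be sketched as a small helper. It assumes the output-size formula given in the PyTorch `nn.Conv2d` documentation with dilation 1, `W = floor((L + 2*pad - K) / stride) + 1`. The function name `conv_out_len` and the example sizes (`L = 20`, `D = 50`) are hypothetical.

```python
def conv_out_len(length, kernel, pad=0, stride=1):
    """Output length along one axis of nn.Conv2d / nn.MaxPool2d (dilation 1)."""
    return (length + 2 * pad - kernel) // stride + 1


L, D = 20, 50  # hypothetical sentence length and embedding size
for K in (3, 4, 5):
    # Height W_k of the conv output, and its width (the kernel spans all of D).
    print(K, conv_out_len(L, K), conv_out_len(D, D))
# 3 18 1
# 4 17 1
# 5 16 1
```

With no padding and stride 1, this reduces to `W_k = L - K + 1`, and a pooling window of height `W_k` then shrinks the height to `conv_out_len(W_k, W_k) = 1`.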

Layers:

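1. Input. The input `X` is a mini-batch of word indices with shape `(N,L)`, where `N` is the mini-batch size and `L` is the sentence length (the number of words). Because the pooling sizes below are computed from `L`, every sentence must be padded or truncated to the same length (`args.sent_max` in the code).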

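2. Embedding layer: `x = self.embed(x)`. Input: `(N,L)`. Parameters: `(V,D)`, where `V` is the vocabulary size and `D` is the embedding dimension. Output: `(N,L,D)`. For 2-D convolution, the output is reshaped to `(N,1,L,D)`, i.e. a single input channel.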

3. CNN layer: `x1 = F.relu(self.conv13(x))`. Input: `(N,1,L,D)`. Parameters: `nn.Conv2d(self.Cin, self.Cout, (3, self.D))`, where `self.Cin = 1` is the number of input channels, `self.Cout = F` is the number of filters, and `(3, self.D)` is the kernel size. Output: `(N,F,W_1,1)`. The most important step is calculating `W_1`. With stride 1, the output height is `W_1 = 1 + (L + 2*pad - 3)` and the output width is `1 + (D + 2*pad - D)`. No padding is used (`pad = 0`), so `W_1 = L - 2` and the width is `1`. Because there are three kernel sizes (3, 4, and 5), the three outputs are `(N,F,W_1,1)`, `(N,F,W_2,1)`, and `(N,F,W_3,1)`, with `W_2 = L - 3` and `W_3 = L - 4`.

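4. Pooling layer: `x1 = self.pool3(x1)`. Taking the kernel size `(3, self.D)` as an example, the input is `(N,F,W_1,1)` and the layer is `nn.MaxPool2d(kernel_size=[poolsize3, 1], stride=1)` with `poolsize3 = W_1`. Because the pooling window covers the entire height, each filter keeps only its maximum activation over the sentence (max-over-time pooling). All three branches therefore output `(N,F,1,1)`, regardless of kernel size.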

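5. Concatenation layer: `torch.cat((x1, x2, x3), 1)`. Input: three tensors of shape `(N,F,1,1)`. Concatenating along dimension 1 (the filter dimension) gives `(N,3F,1,1)`, which is then reshaped to `(N,3F)`.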

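6. Fully-connected layer: `logit = self.fc1(x)`, applied after dropout. Input: `(N,3F)`. Parameters: `(3F,C)`, i.e. a linear map from `3F` features to `C` classes (`nn.Linear` stores the weight transposed, as `(C,3F)`, plus a bias of length `C`). Output: `(N,C)`.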
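The six steps above can be checked end to end with random tensors. This is a sketch under assumed toy sizes (`N = 2`, `L = 10`, `V = 100`, `D = 16`, 4 filters, 2 classes, kernel sizes 3, 4, 5); the variable `n_filters` plays the role of `F` so that it does not shadow `torch.nn.functional`. It also confirms that pooling over the full conv height is the same as taking the max over dimension 2.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
# Toy sizes (assumptions): batch, sentence length, vocab, embedding dim, filters, classes.
N, L, V, D, n_filters, C = 2, 10, 100, 16, 4, 2
Ks = (3, 4, 5)

X = torch.randint(0, V, (N, L))                  # 1. input: (N, L) word indices
x = nn.Embedding(V, D)(X)                        # 2. embedding: (N, L, D)
x = x.unsqueeze(1)                               #    one channel: (N, 1, L, D)

pooled = []
for K in Ks:
    c = F.relu(nn.Conv2d(1, n_filters, (K, D))(x))             # 3. conv: (N, F, L - K + 1, 1)
    assert c.shape == (N, n_filters, L - K + 1, 1)
    p = nn.MaxPool2d(kernel_size=[L - K + 1, 1], stride=1)(c)  # 4. pool: (N, F, 1, 1)
    # A window covering the whole height is just a max over dimension 2.
    assert torch.equal(p, c.max(dim=2, keepdim=True).values)
    pooled.append(p)

h = torch.cat(pooled, dim=1)                     # 5. concat: (N, 3F, 1, 1)
h = h.view(N, -1)                                #    flatten: (N, 3F)
fc = nn.Linear(len(Ks) * n_filters, C)
logits = fc(h)                                   # 6. fully connected: (N, C)
print(tuple(h.shape), tuple(logits.shape), tuple(fc.weight.shape))
# (2, 12) (2, 2) (2, 12)
```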

Illustration of input and output:

Code

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class CNN_Sentence_Classification(nn.Module):
    def __init__(self, args):
        super(CNN_Sentence_Classification, self).__init__()
        self.args = args
        self.V = args.embed_num        # vocabulary size
        self.D = args.embed_dim        # embedding dimension
        self.C = args.class_num        # number of classes
        self.Cin = 1                   # a single input channel
        self.Cout = args.kernel_num    # number of filters F per kernel size
        self.Ks = args.kernel_sizes    # e.g. [3, 4, 5]; exactly three sizes are expected
        self.L = args.sent_max         # padded sentence length

        self.embed = nn.Embedding(self.V, self.D)

        # One convolution per kernel size; each kernel spans the full embedding width D.
        self.conv13 = nn.Conv2d(self.Cin, self.Cout, (self.Ks[0], self.D))
        self.conv14 = nn.Conv2d(self.Cin, self.Cout, (self.Ks[1], self.D))
        self.conv15 = nn.Conv2d(self.Cin, self.Cout, (self.Ks[2], self.D))

        # Each pooling window covers the whole conv output height W = 1 + (L - K).
        poolsize3 = 1 + (self.L - self.Ks[0])
        self.pool3 = nn.MaxPool2d(kernel_size=[poolsize3, 1], stride=1)
        poolsize4 = 1 + (self.L - self.Ks[1])
        self.pool4 = nn.MaxPool2d(kernel_size=[poolsize4, 1], stride=1)
        poolsize5 = 1 + (self.L - self.Ks[2])
        self.pool5 = nn.MaxPool2d(kernel_size=[poolsize5, 1], stride=1)

        self.dropout = nn.Dropout(args.dropout)
        self.fc1 = nn.Linear(len(self.Ks) * self.Cout, self.C)

    def forward(self, x):                          # (N, L)
        x = self.embed(x)                          # (N, L, D)
        x = x.unsqueeze(1)                         # (N, 1, L, D)

        x1 = self.pool3(F.relu(self.conv13(x)))    # (N, Cout, 1, 1)
        x2 = self.pool4(F.relu(self.conv14(x)))    # (N, Cout, 1, 1)
        x3 = self.pool5(F.relu(self.conv15(x)))    # (N, Cout, 1, 1)

        x = torch.cat((x1, x2, x3), 1)             # (N, len(Ks)*Cout, 1, 1)
        x = x.view(x.size(0), -1)                  # (N, len(Ks)*Cout)
        x = self.dropout(x)                        # (N, len(Ks)*Cout)
        logit = self.fc1(x)                        # (N, C)
        return logit
```
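Sizing `pool3`, `pool4`, and `pool5` from `args.sent_max` means every batch must be padded to exactly that length. A shorter batch makes the pooling window larger than its input and raises an error; a longer one leaves a pooled height greater than 1, and the final reshape to `(N, 3F)` then fails. A possible variant, sketched below under the assumption that the same `args` fields are available, builds the convolutions in an `nn.ModuleList` and takes the maximum over the time axis directly, so pooling adapts to whatever `L` the batch has. The class name `KimCNNVariant` and the toy `args` values are hypothetical, and inputs must still be at least as long as the largest kernel size.

```python
import argparse

import torch
import torch.nn as nn
import torch.nn.functional as F


class KimCNNVariant(nn.Module):
    """Hypothetical variant: max-over-time pooling computed per batch,
    so no pooling module is sized from args.sent_max."""

    def __init__(self, args):
        super().__init__()
        self.embed = nn.Embedding(args.embed_num, args.embed_dim)
        # One Conv2d per kernel size; each kernel spans the full embedding width D.
        self.convs = nn.ModuleList(
            [nn.Conv2d(1, args.kernel_num, (k, args.embed_dim)) for k in args.kernel_sizes]
        )
        self.dropout = nn.Dropout(args.dropout)
        self.fc1 = nn.Linear(len(args.kernel_sizes) * args.kernel_num, args.class_num)

    def forward(self, x):                        # (N, L)
        x = self.embed(x).unsqueeze(1)           # (N, 1, L, D)
        feats = []
        for conv in self.convs:
            c = F.relu(conv(x)).squeeze(3)       # (N, F, L - K + 1)
            feats.append(c.max(dim=2).values)    # max over time: (N, F)
        x = torch.cat(feats, dim=1)              # (N, len(Ks) * F)
        return self.fc1(self.dropout(x))         # (N, C)


# Toy hyperparameters (assumptions), using the same field names as the original args.
args = argparse.Namespace(embed_num=100, embed_dim=16, class_num=2,
                          kernel_num=4, kernel_sizes=[3, 4, 5], dropout=0.5)
model = KimCNNVariant(args).eval()
with torch.no_grad():
    shapes = {L: tuple(model(torch.randint(0, args.embed_num, (8, L))).shape)
              for L in (10, 25)}
print(shapes)  # {10: (8, 2), 25: (8, 2)}
```

The trade-off is that the fixed-size `MaxPool2d` layers make the expected input length explicit in the model, matching the dimension analysis, while the variant gives up that explicitness in exchange for accepting batches of different lengths.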